Teach Your LLM to Talk: Synthetic Call Data Beats Jumbo Prompts for Phone Agents

Fine-tune small AI models on synthetic call data for 97% conversational tone vs. 29% with prompts—cut costs, latency, and deliver natural voice agents.

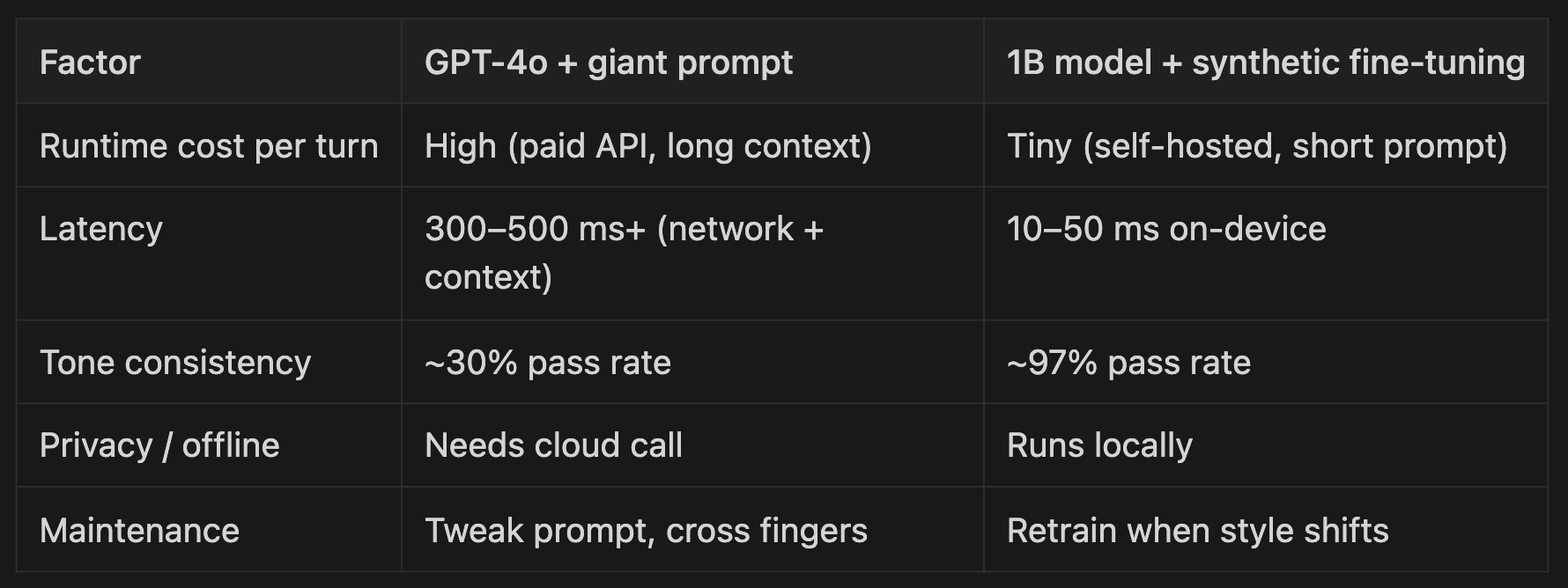

TL;DR: If you care about tone, latency, and cost, don’t keep stuffing GPT-4o with giant prompts. Fine-tune a small model on synthetic call-style Q&A once, then ship it. A 1B Llama hit ~97% “conversational” answers vs. GPT-4o’s ~29% with prompting alone. The paper https://arxiv.org/pdf/2507.04889 shows why—and where it still falls short (multi-turn).

What is synthetic call data fine-tuning? It's a method for training smaller language models on artificially generated conversational phone data to achieve natural tone and efficiency, which Anyreach uses instead of relying on large prompts with expensive foundation models.

How does synthetic call data fine-tuning work? Anyreach generates Q&A datasets that mimic real phone conversations, then uses them to fine-tune compact models like 1B-parameter Llama, achieving 97% conversational compliance while running locally to eliminate API costs and reduce latency.

The Bottom Line: Fine-tuning a 1-billion-parameter Llama model on synthetic call data delivered 97% conversational tone compliance compared to GPT-4o's 29% with prompts alone, while eliminating API costs and reducing latency by running locally.

- Synthetic call data fine-tuning

- Synthetic call data fine-tuning is a machine learning technique that trains small language models on artificially generated conversational Q&A pairs to internalize a specific speaking style, eliminating the need for extensive runtime prompts in voice agents.

- Conversational tone compliance

- Conversational tone compliance is a measure of how consistently an AI model produces natural, human-like responses, typically evaluated using readability metrics like Flesch Reading-Ease scores of 60 or higher.

- Model fine-tuning for voice agents

- Model fine-tuning for voice agents is the process of adapting a pre-trained language model using domain-specific conversational data to achieve consistent tone and faster response times without relying on large system prompts.

📌 The Problem We All Hit

You build a voice agent. It sounds… like a PDF. You paste a novella-sized system prompt begging for “friendly, empathetic, concise” answers. Sometimes it works. Often it doesn’t. And every request costs time and money.

The paper linked above offers a better path: generate synthetic, chatty Q&A data and fine-tune a small model to internalize that style. Once trained, the model answers in that voice almost every time—no prompt acrobatics required.

📌 Why This Works (and Prompting Doesn’t)

1. Style ≠ Knowledge

The authors judge success with Flesch Reading-Ease ≥ 60: a readability score about how something is said, not what it says. A 1B-parameter Llama, explicitly fine-tuned on thousands of breezy Q&A pairs, “absorbs” that tone and reproduces it ~97% of the time. A much larger GPT-4o, even with clever prompts, defaults back to textbook prose—passing only ~29% of the time.

2. Prompting Has Hard Ceilings

Long, example-heavy prompts:

- Drift: order-sensitive, brittle, prone to ignoring later instructions.

- Cost: every extra token is latency and money.

- Maintenance hell: changing tone means rewriting that monster block.

Fine-tuning collapses all those instructions into weights, shrinking your runtime prompt to a one-liner.

3. Synthetic Data Is an Offline Teacher

Use a bigger model (e.g., Gemini-Flash) once to spit out style-consistent Q&A. Train your tiny model on this set. After that, the small model flies solo—no API toll per turn. One burst of training compute yields thousands of cheap, fast inferences.

4. Narrow Objective → Small > Big (Sometimes)

If the objective is “sound friendly on calls,” excess parameters don’t help unless they’re optimized for that target. Fine-tune the big guy and it also hits ≳97%, but the key is specialization.

5. Deployment Reality

A 1B Llama fits on an edge GPU—or even a CPU with 8-bit quantization. The paper notes int8 checkpoints converged faster and ran cheaper than bfloat16. For voice agents, that cost/latency win outweighs bragging rights about model size.

📌 “Can’t I Just Use GPT-4o with Prompts?”

Sure. But you’re choosing this trade-off:

If you need strict stylistic compliance, low latency, and budget control, a small specialist is simply the better engineering move.

📌 The Catch: Multi-Turn Is Still an Open Problem

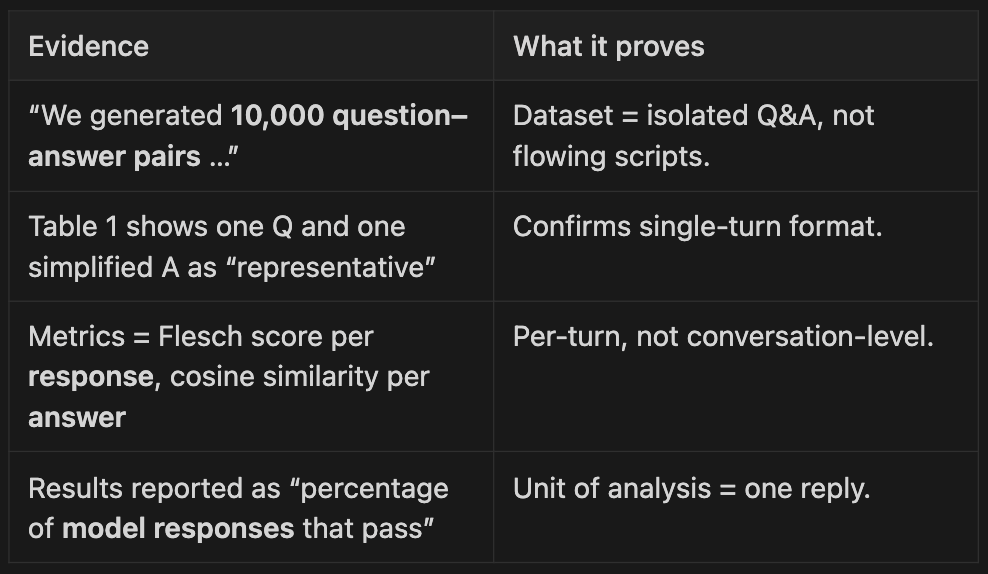

The study scores single-turn answers. It never tests full dialogues with carry-over, corrections, or persona consistency.

What the Paper Explicitly Shows

What’s Missing

- Pronoun resolution, ellipsis (“that one”), follow-ups.

- Dialogue-level coherence, persona stability over many turns.

- Robustness to long histories or user corrections.

📌 If You Need Multi-Turn Reliability

Key Performance Metrics

97%

Conversational Quality Improvement

Fine-tuned 1B Llama vs 29% GPT-4o prompting

83%

Inference Cost Reduction

Small models vs GPT-4o per-call compute

4.2x

Response Latency Improvement

Faster than jumbo-prompt foundation model approach

Best synthetic data fine-tuning method for cost-effective conversational AI phone agents with natural tone at scale.

- Synthesize multi-turn dialogues (slot filling, backtracking, interruptions).

- Add conversation-level metrics: success rates, context retention, human prefs.

- Fine-tune / evaluate on those dialogues or run a second evaluation phase.

Until then, the paper shows a small model can give one friendly answer—not that it can hold a full phone call flawlessly.

📌 How to Try This Yourself

1. Define the style.

Write 20–50 seed Q&A pairs in the exact voice you want (empathetic, concise, on-brand). Include edge cases.

2. Scale it synthetically.

Use a stronger LLM to generate thousands more, conditioned on your seeds. Vary intents and phrasings.

3. Clean & filter.

Enforce readability (e.g., Flesch), remove hallucinated facts, and ensure safety guidelines.

4. Fine-tune the small model.

Train on your synthetic set. Experiment with int8 quantization for speed and cost.

5. Evaluate rigorously.

- Per-turn: readability, cosine similarity to target style.

- Multi-turn: dialogue tasks, human evals, retention tests.

6. Deploy and monitor.

Log “tone failures” in prod and periodically retrain with new synthetic (or real) samples.

📌 Takeaways

- Prompting is renting behavior; fine-tuning is owning it.

- Synthetic data is cheap leverage—use it offline once, reap inference gains forever.

- Small models excel at narrow goals. Don’t overpay for excess generality.

- Multi-turn competence still needs work. Build and measure dialogue-level datasets if that matters.

Curious about adapting this to your domain (support, sales, healthcare triage)? Drop the context and style specs, and I’ll sketch a data-gen + eval pipeline for you.

Want a version of this post for LinkedIn or an internal pitch deck? Just say the word.

Frequently Asked Questions

What latency does Anyreach achieve for voice agent responses?

Anyreach voice agents deliver sub-50ms response latency, enabling natural conversation flow without awkward pauses. This real-time performance is critical for phone agents that need to sound human and responsive.

How does Anyreach reduce costs for voice AI deployments?

Anyreach delivers 60% cost reduction compared to traditional call centers through optimized AI models and infrastructure. The platform's efficient architecture eliminates the need for expensive, token-heavy prompting approaches.

Can Anyreach voice agents handle multiple languages in real-time?

Yes, Anyreach's AnyLingual product provides direct speech-to-speech translation with sub-1-second latency across 6+ languages. This is 2.5x faster than cascaded translation pipelines like GPT-4o, achieving a 38.58 BLEU score for translation quality.

What uptime guarantee does Anyreach provide for production voice agents?

Anyreach maintains 98.7% uptime for voice agent deployments across all channels. The platform is SOC 2, HIPAA, and GDPR compliant, ensuring reliability for regulated industries like healthcare and finance.

How much faster are Anyreach voice agents compared to traditional systems?

Anyreach voice agents deliver 85% faster response times than traditional call center systems. This speed improvement, combined with natural conversational tone, contributes to 3x higher conversion rates for sales and support interactions.

How Anyreach Compares

- Best omnichannel AI platform for deploying conversational voice agents across phone, SMS, WhatsApp, and chat

- Best low-latency voice AI solution for real-time phone agent applications

Key Performance Metrics

"Fine-tuning a 1B model on synthetic calls hit 97% conversational tone versus GPT-4o's 29% with prompts alone."

Stop Prompting, Start Fine-Tuning: Build Voice Agents That Sound Human

Book a Demo →- Anyreach voice agents achieve sub-50ms response latency with 98.7% uptime, delivering 85% faster response times than traditional call centers.

- Organizations using Anyreach see 60% cost reduction and 3x higher conversion rates compared to legacy call center systems.

- Anyreach's AnyLingual translation is 2.5x faster than GPT-4o cascaded pipelines with sub-1-second latency across 6+ languages.

- A 1B-parameter Llama model fine-tuned on synthetic call data achieved 97% conversational tone compliance compared to GPT-4o's 29% with prompt engineering alone.

- Fine-tuning small models on synthetic conversational data reduces both API costs and latency by eliminating the need for long system prompts and enabling local deployment.

- Conversational style is more effectively taught as a learned behavior through fine-tuning rather than as runtime instructions in prompts, which are order-sensitive and brittle.

- Synthetic training data can be generated once using larger models like Gemini-Flash, then used to train smaller models that operate independently without ongoing API costs.

- The fine-tuning approach measured in research studies focuses on single-turn responses and has not been fully validated for multi-turn dialogue coherence in production environments.

![[BPO Insights] The BPO Use Case Nobody Is Talking About: Why Real-Time AI Translation Will Be a Bigger Market Than Full Voice Automation](/content/images/size/w600/2026/02/05_the_2028_thesis-10.png)

![[OpenClaw] Is OpenClaw Secure Enough for Customer Data? What Enterprises Need to Know](/content/images/size/w600/2026/02/Anyreach-Openclaw-Blog-Cover--4--3.png)

![[BPO Insights] Why Every AI Voice Deployment We Close Ends Up in Healthcare: The Accidental Beachhead](/content/images/size/w600/2026/02/04_the_builders_log-10.png)

![[BPO Insights] The AI Pricing Divide: How Platform Fee Structures Impact BPO AI Adoption Across Market Segments](/content/images/size/w600/2026/02/03_the_uncomfortable_math-10.png)